Williams’s wrongful arrest, which was first reported by the New York Times in August 2020, was based on a bad match from the Detroit Police Department’s facial recognition system. Two more instances of false arrests have since been made public. Both cases also involved Black men, and both men have taken legal action to try to rectify the situation.

Now Williams is following their path and going further: not only suing the Detroit Police for his wrongful arrest, but also trying to get the technology banned.

On Tuesday, the ACLU and the University of Michigan Law School’s Civil Rights Litigation Initiative filed a lawsuit on behalf of Williams, alleging that his arrest violated his Fourth Amendment rights and was in defiance of Michigan’s civil rights law.

The suit requests compensation, greater transparency about the use of facial recognition, and an end to the Detroit Police Department’s use of all facial recognition technology, whether direct or indirect.

What the lawsuit says

The documents filed on Tuesday lay out the case. In March 2019, the DPD had run a grainy photo of a Black man with a red cap from Shinola’s surveillance video through its facial recognition system, made by a company called DataWorks Plus. The system returned a match with an old driver’s license photo of Williams. Investigating officers then included Williams’s license photo as part of a photo line-up, and the Shinola security guard identified Williams as the thief. The officers obtained a warrant, which requires multiple sign-offs from department leadership, and Williams was arrested.

The complaint argues that the false arrest of Williams was a direct result of the facial recognition system, and that “this wrongful arrest and imprisonment case exemplifies the grave harm caused by the misuse of, and reliance upon, facial recognition technology.”

The case contains four counts: three focus on the lack of probable cause for the arrest, while one focuses on the racial disparities in facial recognition. “By employing technology that is empirically proven to misidentify Black people at rates far higher than other groups of people,” it states, “the DPD denied Mr. Williams the full and equal enjoyment of the Detroit Police Department’s services, privileges, and advantages because of his race or color.”

Facial recognition’s difficulties in identifying darker-skinned people are well documented. After the killing of George Floyd in Minneapolis in 2020, some cities and states announced bans and moratoriums on the police use of facial recognition. But many others, including Detroit, continued to use it despite growing concerns.

“Relying on subpar images”

When MIT Technology Review spoke with Williams’s ACLU lawyer, Phil Mayor, last year, he stressed that problems of racism within American law enforcement made the use of facial recognition even more concerning.

“This isn’t a one bad actor situation,” Mayor said. “This is a situation in which we have a criminal legal system that is extremely quick to charge, and extremely slow to protect people’s rights, especially when we’re talking about people of color.”

Eric Williams, a senior staff attorney at the Economic Equity Practice in Detroit, says cameras have many technological limitations, not least that they are hardcoded with color ranges for recognizing skin tone and often simply cannot process darker skin.

“I think every Black person in the country has had the experience of being in a photo and the picture turns up either way lighter or way darker,” says Williams, who is a member of the ACLU of Michigan’s lawyers committee, but is not working on the Robert Williams case. “Lighting is one of the primary factors when it comes to the quality of an image. So the fact that law enforcement is relying, to some degree… on really subpar images is problematic.”

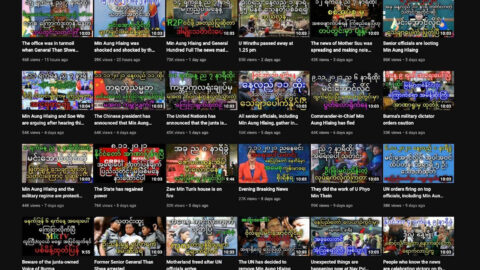

There have been cases that challenged biased algorithms and artificial intelligence technologies on the basis of race. Facebook, for example, underwent a massive civil rights audit after its targeted advertising algorithms were found to serve ads on the basis of race, gender, and religion. YouTube was sued in a class action lawsuit by Black creators who alleged that its AI systems profile users and censor and discriminate against content based on race. YouTube was also sued by LGBTQ+ creators who said that content moderation systems flagged the words “gay” and “lesbian.”

Some experts say it was only a matter of time until the use of biased technology in a major institution like the police was met with legal challenges.
