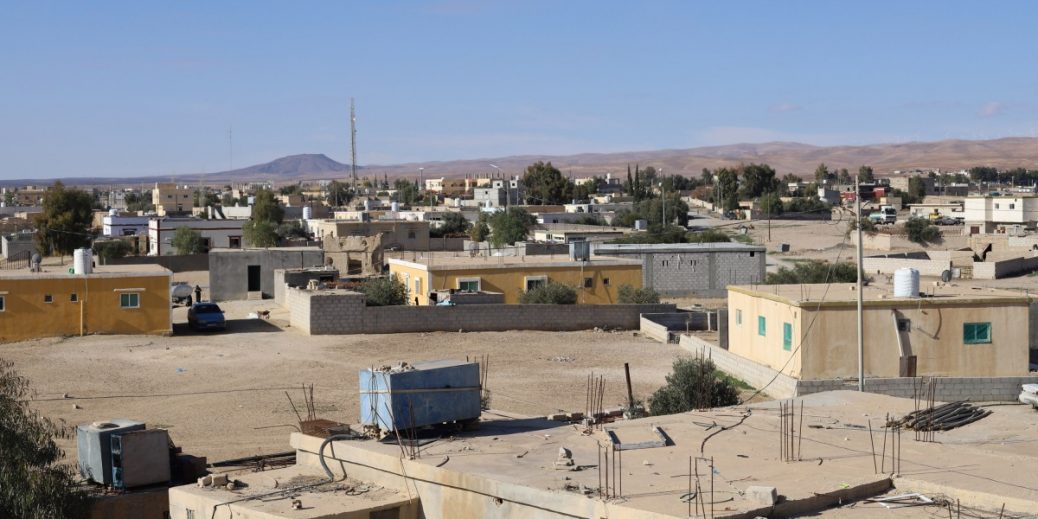

The news: An algorithm funded by the World Bank to determine which families should get financial assistance in Jordan likely excludes people who should qualify, an investigation from Human Rights Watch has found.

Why it matters: The organization identified several fundamental problems with the algorithmic system that resulted in bias and inaccuracies. The system ranks families applying for aid from least poor to poorest using a secret calculus that assigns weights to 57 socioeconomic indicators. Applicants say the calculus does not reflect reality and oversimplifies their economic situations.

The bigger picture: AI ethics researchers are calling for more scrutiny of the increasing use of algorithms in welfare systems. One of the report’s authors says its findings point to the need for greater transparency into government programs that use algorithmic decision-making. Read the full story.

—Tate Ryan-Mosley

We are all AI’s free data workers

The fancy AI models that power our favorite chatbots require a whole lot of human labor. Even the most impressive chatbots need thousands of hours of human work to behave the way their creators intend, and even then they do so unreliably.

Human data annotators give AI models the context they need to make decisions at scale and appear sophisticated, often working at an incredibly rapid pace to meet high targets and tight deadlines. But some researchers argue that we are all unpaid data laborers for big technology companies, whether we are aware of it or not. Read the full story.
