Human babies are far better at learning than even the very best large language models. To be able to write in passable English, ChatGPT had to be trained on massive data sets that contain millions upon millions of words. Children, on the other hand, have access to only a tiny fraction of that data, yet by age three they’re communicating in quite sophisticated ways.

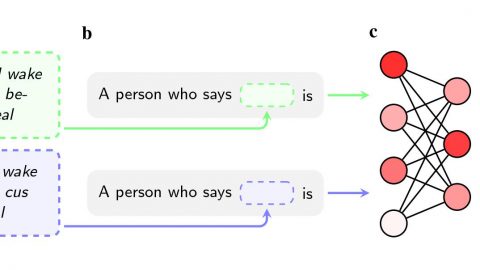

A team of researchers at New York University wondered if AI could learn like a baby. What could an AI model do when given a far smaller data set—the sights and sounds experienced by a single child learning to talk?

A lot, it turns out. This work, published in Science, not only provides insights into how babies learn but could also lead to better AI models. Read the full story.

—Cassandra Willyard

The next generation of mRNA vaccines is on its way

Japan recently approved a new mRNA vaccine for covid, and this one is pretty exciting. Just like the mRNA vaccines you know and love, it delivers the instructions for making the virus’s spike protein. But here’s what makes it novel: it also tells the body how to make more mRNA. Essentially, it’s self-amplifying.

These kinds of vaccines offer a couple of important advantages over conventional mRNA vaccines, at least in theory. The dose can be much lower, and it’s possible that they will induce a more durable immune response.